Linguistics Colloquium, Friday 4/25

Department of Linguistics and Asian/Middle Eastern Languages

Please join us for an in-person event in the Linguistics conference room (SHW 237) on Wednesday, 4/16 at 11am! We are delighted to have Dr. Austin German, post-doctoral researcher at UChicago, give a talk titled “The impact of interaction on lexical and sub-lexical variation in Zinacantec Family Homesign.” Please see the flyer below for the talk abstract and more details!

We are honored to welcome Dr. Claudia Holguín Mendoza, Associate Professor of Spanish Linguistics at UC Riverside, as our keynote speaker for the 48th Annual Linguistics Colloquium, which will take place on April 25th. Please see the abstract for the keynote address below, and stay tuned for more details about the event!

Critical Sociocultural Linguistic Literacy (CriSoLL): Transformative and Liberating Spanish Language Research and Education – Keynote address by Dr. Claudia Holguín Mendoza

This presentation provides an overview of the Critical Sociocultural Linguistic Literacy (CriSoLL) theoretical and pedagogical approach. It also presents the background research behind CriSoLL: results from sociolinguistic studies investigating how linguistic awareness among Latinx Spanish-English bilinguals and Spanish Heritage Language learners varies generationally and across different categories of stigmatized Mexican Spanish elements. These findings suggest the importance of promoting symbolic competence and critical literacy among Spanish language researchers, educators, and students regarding how language and power structures operate.

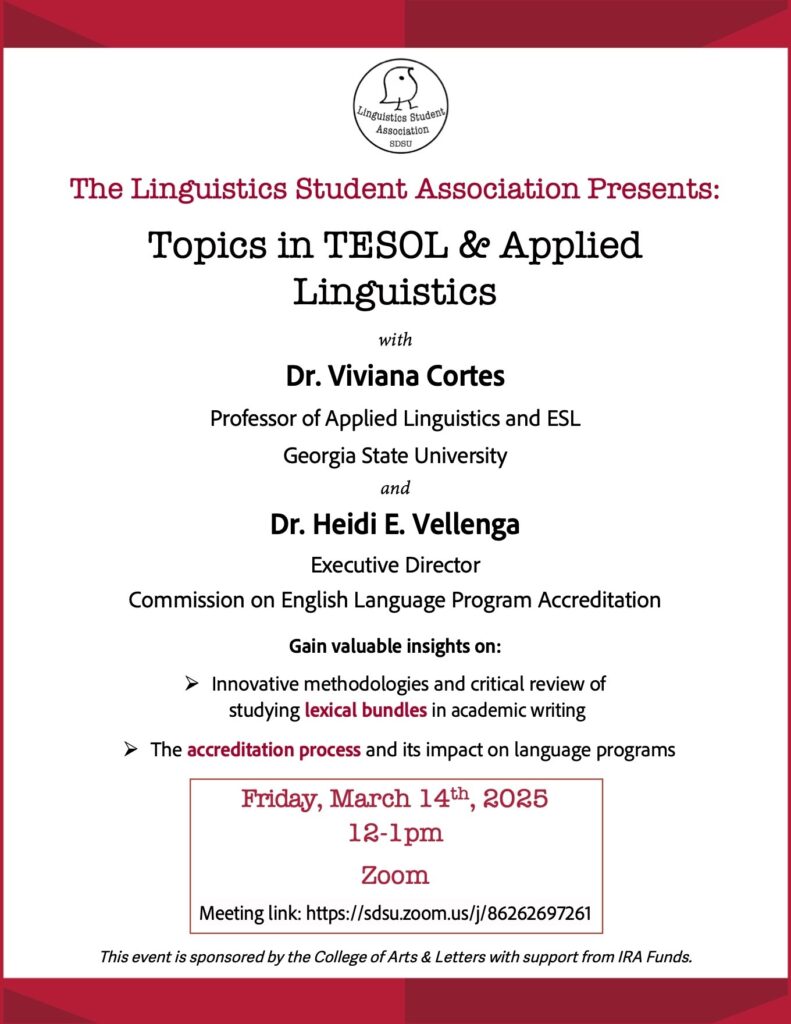

Meeting link: https://SDSU.zoom.us/j/86262697261 [SDSU log in required]

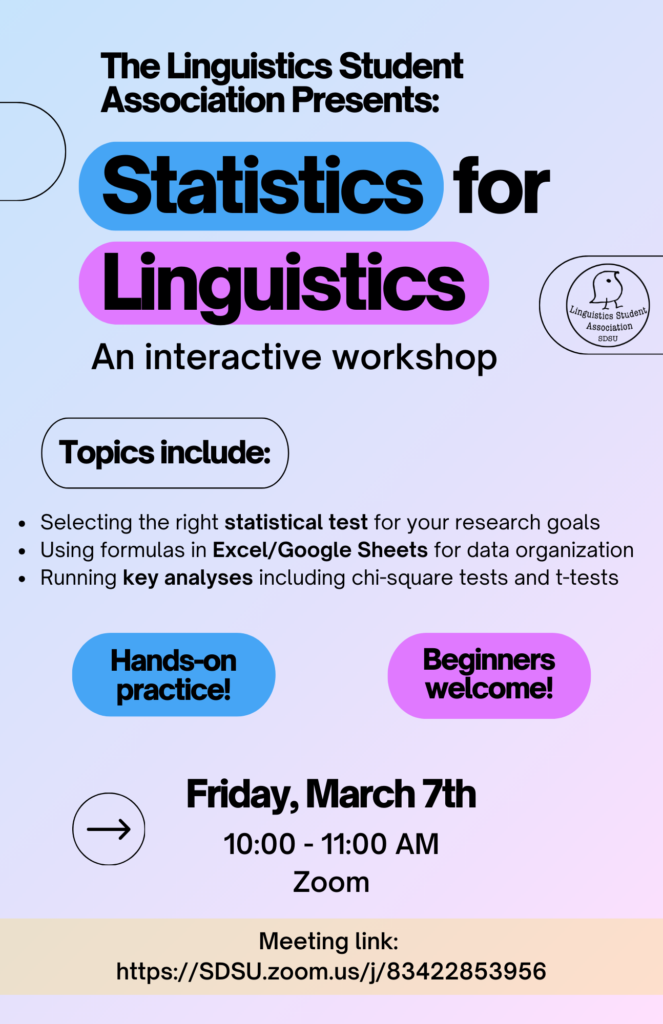

Please join us for an interactive online workshop on Friday, March 7th at 10am on Zoom. Students from all departments are welcome to join! Zoom link: https://SDSU.zoom.us/j/83422853956 [SDSU log in required]

Dr. Soonja Choi

Department of Linguistics

University of Vienna

and

Department of Linguistics and Asian/Middle Eastern Languages

San Diego State University

11:00am-12:00pm

March 20, 2019

SH 109

Abstract

Regardless of culture and language, we routinely talk about the events we experience and make efforts to be clear and efficient in our communication (Grice 1975). This is true of one of the most frequent event types, motion events (Talmy 1978), which involve the movement of objects, such as putting a cup on a table. In these events, the moving object (e.g., the cup) is the Figure and the reference object (e.g., the table) is the Ground. The two entities have distinct perceptual properties and assume conceptually asymmetric roles: the Figure (F) is the entity moving along a trajectory (e.g., onto, into), whereas the Ground (G) serves as the non-moving reference frame.

Comparing German and Korean speakers, I present variation in linguistic description and cognitive behaviors for motion events. In particular, I examine (i) the degrees to which German and Korean speakers differentiate between F and G semantically (spatial terms) and syntactically (grammatical roles: subject, object) and (ii) their eye-gaze and memory patterns for F and G. In the linguistic study, participants described dynamic video events involving two objects that systematically switched their F-G roles (e.g., put cup(F) on table(G) and put table(F) under cup(G)). German speakers used distinct spatial terms (e.g., auf ‘on’, unter ‘under’) for opposing F-G relations, thus encoding the F-G asymmetry. In contrast, Korean speakers frequently used the same terms (e.g., kkita ‘fit.tightly’) and the same syntactic constructions regardless of the switches in F-G roles. These crosslinguistic differences were more evident for Non-typical events (put table under cup) than for Typical events (put cup on table), showing that linguistic encoding interacts with the degree of familiarity of these events in the real world. The differences also reflect language-specific spatial semantics and differences in the way the two languages perspectivize/contextualize the Figure-Ground relation.

German and Korean speakers also differed in perceptual/cognitive behaviors: German speakers looked longer at the Figure, particularly in Non-typical events (compared to Typical events), but Korean speakers showed no such difference. In the memory test, German speakers were better than Korean speakers at remembering which object moved, i.e., the Figure. I relate these behavioral differences between German and Korean speakers to their differences in linguistic representation of Figure and Ground.

References:

Choi, S., Goller, F., Hong, U., Ansorge, U., and Yun, H. (in press). Figure and Ground in spatial language: Evidence from German and Korean. Language and Cognition.

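Grice, H. P. (1975). Logic and conversation. In P. Cole & J. L. Morgan (Eds.), Syntax and semantics, Vol. 3: Speech acts (pp. 41–58). Academic Press.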

Talmy, L. (1978). Figure and ground in complex sentences. In J. Greenberg, C. Ferguson, & E. Moravcsik (Eds.), Universals of human language (pp. 627–649). Stanford Univ. Press.

Thiering, M. (2015). Spatial semiotics and spatial mental models: Figure-Ground asymmetries in languages. (Applications of Cognitive Linguistics). Berlin: Mouton de Gruyter.

This event is co-sponsored by the Dept of Linguistics and Asian/Middle Eastern Languages and the SDSU Linguistics Student Association, and is supported by the College of Arts & Letters Instructionally Related Activities Fund.

Dr. Will Styler

Linguistics Department

University of California, San Diego

3:00pm-4:00pm

March 8, 2019

SH 109

Abstract

Machine learning, the use of nuanced computer models to analyze and predict data, has a long history in speech recognition and natural language processing, but has often been limited to more applied, engineering tasks. This talk will describe two more research-focused applications of machine learning in the study of speech perception and production.

For speech perception, we’ll examine the difficult problem of identifying acoustic cues to a complex phonetic contrast, in this case, vowel nasality. Here, by training machine learning algorithms on acoustic measurements, we can more directly measure the informativeness of the various acoustic features to the contrast. This by-feature informativeness data was then used to create hypotheses about human cue usage, and then, to model the observed human patterns of perception, showing that these models were able to predict not only the utilized cue, but the subtle patterns of perception arising from less informative changes.

For speech production, we’ll focus on data from Electromagnetic Articulography (EMA), which provides position data for the articulators with high temporal and spatial resolution, and discuss our ongoing efforts to identify and characterize pause postures (specific vocal tract configurations at prosodic boundaries, cf. Katsika et al. 2014) in the speech of 7 speakers of American English. Here, the lip aperture trajectories of 800+ individual pauses were gold-standard annotated by a member of the research team, and then subjected to principal component analysis. These analyses were then used to train a support vector machine (SVM) classifier, which achieved 96% classification accuracy in cross-validation tests, with a Cohen’s Kappa of 0.79 for machine-to-annotator agreement, suggesting the potential for improvements in speed, consistency, and objective characterization of gestures.
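For readers unfamiliar with the agreement statistic mentioned above, the following is a minimal sketch of how Cohen's Kappa can be computed for two sets of labels. It is an illustration only: the function name `cohens_kappa`, the label names, and the counts are invented toy values, not the study's annotations or the authors' code.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two equal-length sequences of category labels."""
    if len(labels_a) != len(labels_b) or len(labels_a) == 0:
        raise ValueError("label sequences must be non-empty and equal in length")
    n = len(labels_a)
    # Observed agreement: proportion of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: sum over categories of the product of each rater's
    # marginal proportion for that category.
    counts_a = Counter(labels_a)
    counts_b = Counter(labels_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical category throughout; agreement is total.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Toy data (assumed for illustration): 100 pauses, most without a pause posture.
annotator = ["none"] * 90 + ["posture"] * 10
classifier = ["none"] * 88 + ["posture"] * 2 + ["none"] * 2 + ["posture"] * 8

raw_agreement = sum(a == b for a, b in zip(annotator, classifier)) / len(annotator)
kappa = cohens_kappa(annotator, classifier)
print(f"raw agreement: {raw_agreement:.2f}")
print(f"Cohen's kappa: {kappa:.2f}")
```

In this toy data the two label sets agree on 96 of 100 items, yet kappa comes out at about 0.78, because one label dominates and much of the raw agreement would be expected by chance. This is one way a high accuracy and a more moderate kappa can coexist.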

These two approaches, modeling feature importance and classifying curves with machine learning, are concrete methods that are potentially applicable to a variety of questions in phonetics and, more broadly, in linguistics.

This event is co-sponsored by the Dept of Linguistics and Asian/Middle Eastern Languages and the SDSU Linguistics Student Association, and is supported by the College of Arts & Letters Instructionally Related Activities Fund.